A3C

https://stalljust.se/

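[5-Minute Lecture: Deep Reinforcement Learning #3] A3C, the algorithm that's hot right now. A3C is a reinforcement learning approach in which multiple agents learn asynchronously. The article explains asynchronous learning and its advantages, the drawbacks of A3C, and the A3C-VAE algorithm as a successor to A3C.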

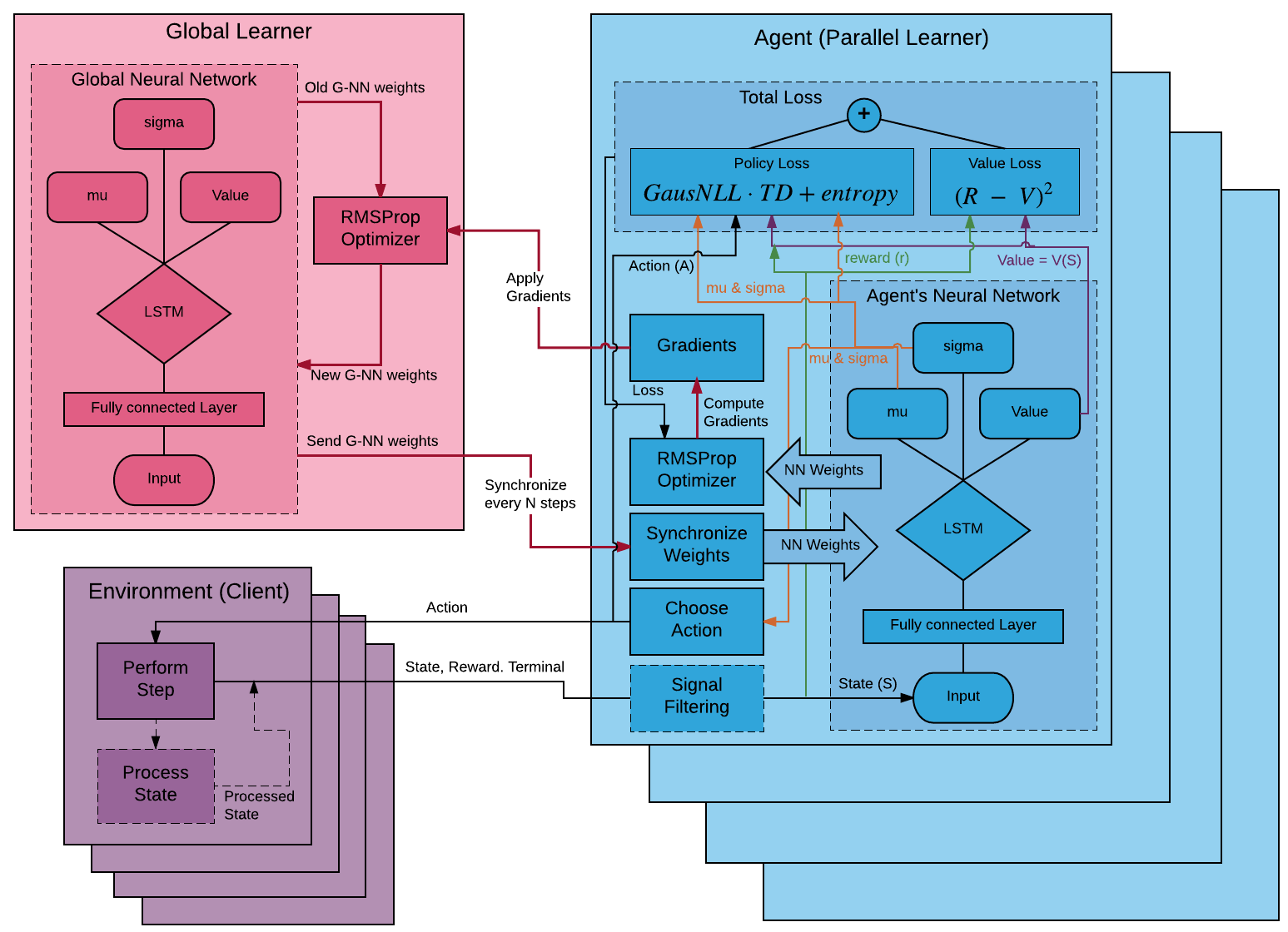

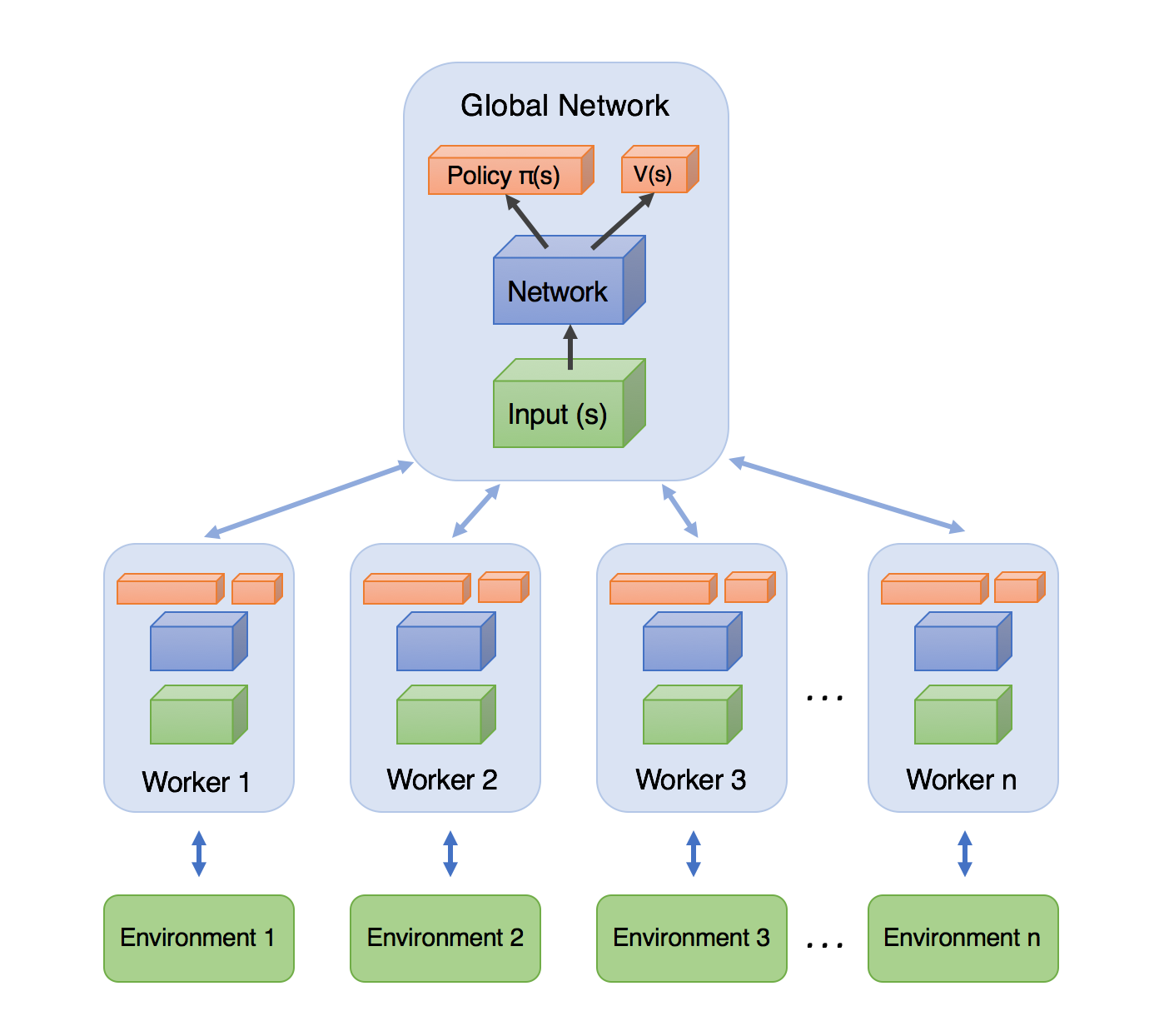

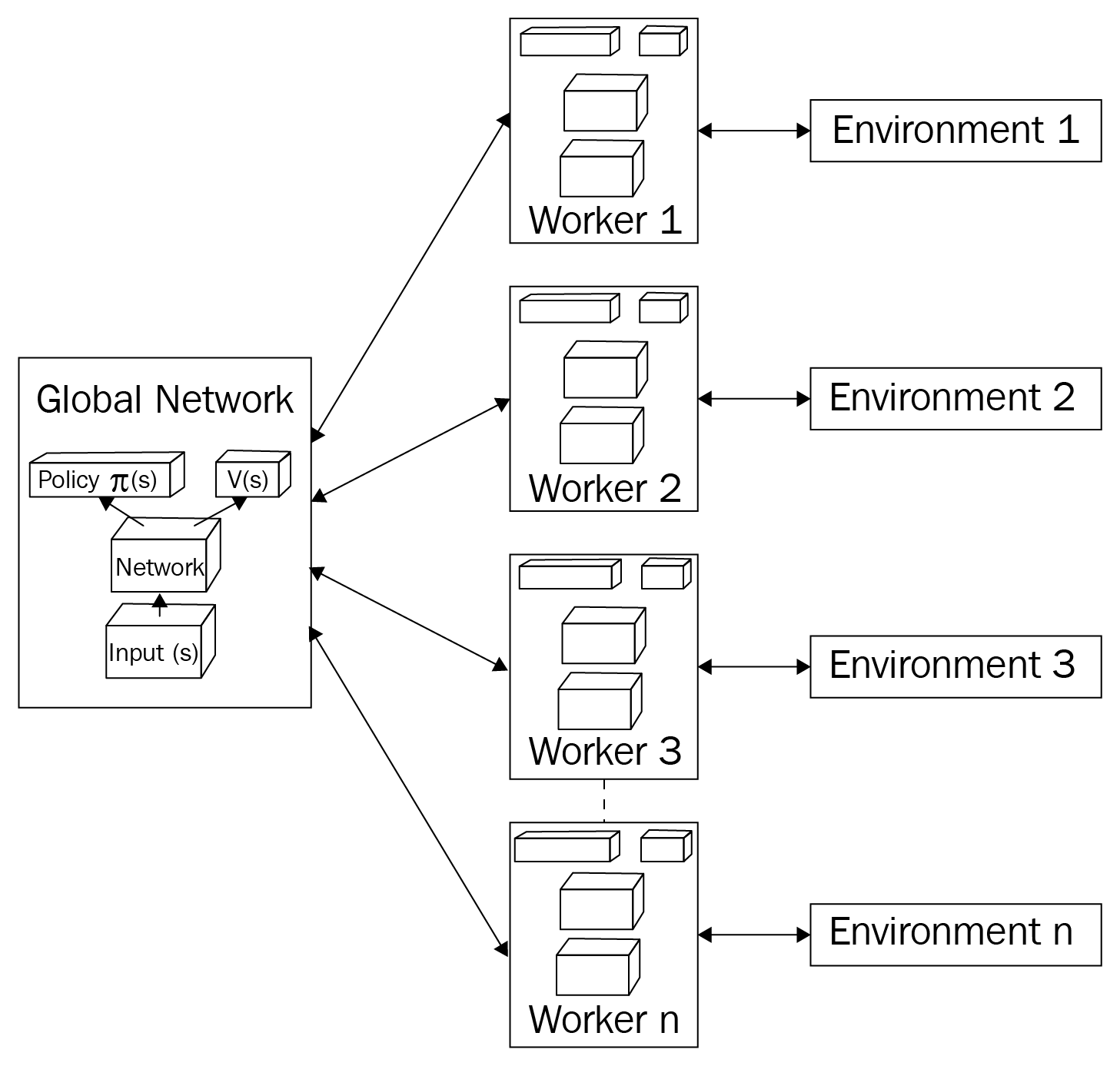

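[Reinforcement Learning] Learning A3C by Implementing It [Balancing a Pole with CartPole: 1... A3C is short for "Asynchronous Advantage Actor-Critic." This article introduces where A3C sits within reinforcement learning. The field advanced greatly in 2013, when DQN, a reinforcement learning method that incorporates deep learning, was announced.

[5-Minute Lecture: Deep Reinforcement Learning #4] Understanding how A3C works and how well it performs. A3C is asynchronous learning by agents using a distributed Actor-Critic network structure, and it both speeds up and stabilizes training. This article explains in detail how A3C's training works, its loss function, its hyperparameters, and the results of performance experiments, and compares its performance with that of other deep reinforcement learning methods.

7 Tips for Moving On from DQN Without Giving Up Halfway Through A3C #Python - Qiita. A3C, short for Asynchronous Advantage Actor-Critic, was announced in 2016 by DeepMind, the company famous for DQN. Fast: because it trains asynchronously and uses the advantage, learning progresses quickly!

[Reinforcement Learning] Explaining and Implementing Distributed Reinforcement Learning (GORILA, A3C, Ape... A3C paper: Asynchronous Methods for Deep Reinforcement Learning. Only the architecture is covered here; A3C/A2C will get a separate article. A3C is basically the same as GORILA, with the following changes: parameter server and Learner.

[1602.01783] Asynchronous Methods for Deep Reinforcement Learning. A paper that proposes a framework for deep reinforcement learning using asynchronous gradient descent for optimization of deep neural network controllers. The paper presents asynchronous variants of four standard reinforcement learning algorithms and shows their performance on various tasks, such as Atari, motor control and 3D mazes.

PDF Asynchronous Methods for Deep Reinforcement Learning. A3C is a variant of actor-critic reinforcement learning that uses asynchronous gradient descent to train deep neural network controllers. The paper presents the design, results and advantages of A3C on various domains and tasks, such as Atari 2600, continuous motor control and 3D mazes.

Visual Explanation Using an Attention Mechanism in A3C - J-STAGE.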

[Deep Learning] What Is A3C (Reinforcement Learning)? | 意味を考えるblog. A3C is a reinforcement learning training method whose defining feature is that multiple agents learn asynchronously in the same environment, yet it is very often hard to understand. This post explains the key points of A3C.

A3C Pushbutton Switch (Lighted/Non-Lighted) (Round Body, φ12) / Features. A3C pushbutton switch (lighted/non-lighted) (round body, φ12): a φ12 round-body series with a 20 mm body length.

A3C Explained | Papers With Code. A3C is a policy gradient algorithm that updates the policy and the value function after every $t_{\text{max}}$ actions or when a terminal state is reached. It uses a mix of n-step returns to estimate the advantage function, and its parallel actors are synced with the global parameters every so often. See the paper, code, results and components of A3C on Papers With Code.

A3C: An Asynchronous Reinforcement Learning Method - Zhihu. A3C is an algorithm proposed by Google DeepMind to solve the convergence problems of Actor-Critic. A key feature of DQN is its experience replay buffer, which reduces the correlation between samples; A3C instead proposes another way to reduce that correlation: asynchrony.

A3C from Scratch #ReinforcementLearning - Qiita. A3C can be applied both to reinforcement learning tasks with discrete action spaces and to tasks with continuous action spaces, so in this implementation the order quantity is decided in integer units (that is, the action space = {0, 1, 2, ..., 99...

A3C with PyTorch - rarilurelo's diary. Contents: About PyTorch; Python's multiprocessing; A3C; Implementation; Results; This post's code; Afterword. About PyTorch: a library called PyTorch, which has Torch as its backend, was released just the other day. Among neural network libraries, PyTorch is the type that builds networks dynamically, and computation...

A Summary of Deep Reinforcement Learning Algorithms #MachineLearning - Qiita. This is the basis of the well-known A3C algorithm introduced later. In the methods that follow, speeding up training through distributed processing becomes the mainstream. Ape-X: an algorithm that speeds up prioritized experience replay through distributed processing. Besides the DQN version, there is also a deterministic policy gradient (DPG) version.

Can Reinforcement Learning (Advantage Actor-Critic) Trade FX? (Basics). The structure of A3C is shown in the following graph (left). In A3C, multiple agents each explore their own environment (Env) and train a neural network. After training, the gradient information for each agent's parameters is passed asynchronously to the master network...

OpenAI Baselines: ACKTR & A2C. We're releasing two new OpenAI Baselines implementations: ACKTR and A2C. A2C is a synchronous, deterministic variant of Asynchronous Advantage Actor Critic (A3C) which we've found gives equal performance. ACKTR is a more sample-efficient reinforcement learning algorithm than TRPO and A2C, and requires only slightly more computation than A2C per update.

About | A3C Collaborative Architecture. At A3C, we collaborate with our clients to arrive at designs that will work best for them, and we focus on making our designs as sustainable as possible.

uvipen/Super-mario-bros-A3C-pytorch - GitHub. Asynchronous Advantage Actor-Critic (A3C) algorithm for Super Mario Bros. Topics: python, mario, reinforcement-learning, ai, deep-learning, pytorch, gym, a3c, super-mario-bros.
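The Papers With Code summary above says A3C updates after every $t_{\text{max}}$ actions (or at a terminal state) and estimates the advantage from n-step returns. A minimal sketch of that arithmetic follows, assuming the critic's value estimates for the segment are already available as plain floats; `n_step_returns` and `advantages` are hypothetical helper names, not functions from any library.

```python
from typing import List, Sequence


def n_step_returns(rewards: Sequence[float], bootstrap_value: float, gamma: float) -> List[float]:
    """Discounted returns R_t = r_t + gamma * R_{t+1} for one segment, computed backwards.

    bootstrap_value is the critic's estimate V(s_{t_max}) for the state after the
    segment, or 0.0 if the segment ended in a terminal state.
    """
    returns = [0.0] * len(rewards)
    running = bootstrap_value
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def advantages(returns: Sequence[float], values: Sequence[float]) -> List[float]:
    """Advantage estimates A_t = R_t - V(s_t)."""
    return [r - v for r, v in zip(returns, values)]
```

For example, three rewards of 1.0 with $\gamma = 0.5$ and a terminal final state give returns `[1.75, 1.5, 1.0]`; the earliest step in the segment accumulates the most look-ahead, the last one only its own reward.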

The APOBEC3C crystal structure and the interface for HIV-1 Vif binding. The A3C structure has a core platform composed of six α-helices (α1-α6) and five β-strands (β1-β5), with a coordinated zinc ion that is well conserved in the cytidine deaminase...

Beyond DQN/A3C: A Survey in Advanced Reinforcement Learning. Subsequently, DeepMind's A3C (Asynchronous Advantage Actor Critic) and OpenAI's synchronous variant A2C popularized a very successful deep learning-based approach to actor-critic methods. Actor-critic methods combine policy gradient methods with a learned value function. With DQN, we only had the learned value function, the Q-function...

Asian & Asian American Center | Student & Campus Life - Cornell University. The mission of the A3C is to acknowledge and celebrate the rich diversity that Asian Pacific Islander Desi American (APIDA) students bring to Cornell and to actively foster a supportive and inclusive campus community. As a unit within the Dean of Students Centers for Student Equity, Empowerment, and Belonging, we create and promote positive...

Reinforcement Learning and Asynchronous Actor-Critic Agent (A3C... The A3C algorithm is one of RL's state-of-the-art algorithms, which beats DQN in a few domains (for example, the Atari domain; see the fifth page of a classic paper by Google DeepMind). Also, A3C...

Obtaining a Driver's License: Category A-3-c - DePeru.com. Lince: Av. César Vallejo Nº 603. Cercado de Lima: Calle Antenor Orrego Nº 1923. Office hours are Monday through Friday from 8:30 a.m. ...

pull: assign the main network's parameters directly to the network in each Worker. push: use the gradients in each Worker to update the main network's parameters. Implementing A3C in code.

Learning Reinforcement Learning (4) - Actor-Critic, A2C, A3C · greentec's blog. Figure: the structure of A3C. Each agent explores its own independent environment and exchanges learning results with the global network. As Figure 7 shows, A3C runs several A2C agents independently, each exchanging learning results with the global network.

PDF REINFORCEMENT LEARNING WITH UNSUPERVISED AUXILIARY TASKS - arXiv.org. (a) Base A3C Agent. Figure 1: Overview of the UNREAL agent. (a) The base agent is a CNN-LSTM agent trained on-policy with the A3C loss (Mnih et al., 2016). Observations, rewards, and actions are stored in a small replay buffer which encapsulates a short history of agent experience. This experience is used by auxiliary learning tasks. (b) Pixel...

a3c · GitHub Topics. A Chinese-language reinforcement learning tutorial, the "Mushroom Book" (蘑菇书); read online at: atawhalechina.github.io/easy-rl/. Topics: reinforcement-learning, deep-reinforcement-learning, q-learning, dqn, policy-gradient, sarsa, a3c, ddpg, imitation-learning, double-dqn, dueling-dqn, ppo, td3, easy-rl.
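The pull/push description and the greentec's blog summary above can be sketched with Python threads. This is a toy sketch under loudly stated assumptions: the "network" is just a list of floats, the gradient comes from a hypothetical quadratic loss instead of an actor-critic rollout, and each push is serialized with a lock for simplicity (asynchronous implementations often apply updates without locking). `GlobalNetwork`, `toy_gradient`, `worker`, and `run` are hypothetical names.

```python
import threading
from typing import List, Sequence


class GlobalNetwork:
    """Toy stand-in for the shared global network: a flat list of parameters."""

    def __init__(self, params: Sequence[float]) -> None:
        self.params = list(params)
        self.lock = threading.Lock()

    def pull(self) -> List[float]:
        # pull: copy the global parameters into a worker's local network.
        with self.lock:
            return list(self.params)

    def push(self, grads: Sequence[float], lr: float) -> None:
        # push: apply one worker's gradients to the global parameters.
        with self.lock:
            for i, g in enumerate(grads):
                self.params[i] -= lr * g


def toy_gradient(local: Sequence[float], target: Sequence[float]) -> List[float]:
    # Hypothetical loss sum((w - t)^2); stands in for a rollout plus backprop.
    return [2.0 * (w - t) for w, t in zip(local, target)]


def worker(net: GlobalNetwork, target: Sequence[float], steps: int, lr: float) -> None:
    for _ in range(steps):
        local = net.pull()                   # sync the local copy
        grads = toy_gradient(local, target)  # may be computed on a stale copy
        net.push(grads, lr)                  # update without waiting for other workers


def run(num_workers: int = 4, steps: int = 200, lr: float = 0.05,
        target: Sequence[float] = (1.0, -2.0)) -> List[float]:
    net = GlobalNetwork([0.0] * len(target))
    threads = [threading.Thread(target=worker, args=(net, target, steps, lr))
               for _ in range(num_workers)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return net.pull()
```

With several workers, a push may be computed from parameters that another worker has already changed; that staleness is the asynchrony the snippets describe, and the toy problem still converges toward `target`.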

Aircrew training is A3's priority in AMC - Air Mobility Command. In fact, of the 11 divisions within the directorate, there are five primarily focused on readiness and training. These divisions include A3T, A3R, A3D, A3C and A3Y. In the past year, the directorate established A3Y, an exercise division that executes the commander's latest training guidance, said Col. Michael Zick, A3 deputy director of operations.

PDF GE-R Male stud connector - Parker Hannifin Corporation. Series 5 T D3 D4 L1 L2 L3 L4 S1 S2 g/1 piece Order code* CF A3C 71 MS L3) 06 G1/8A 4 14 23.5 8.5 8 23.0 14 14 14 GE06LR 315 315 315 200 06 G1/4A 4 18 29.0 10.0 12 25.0 19 14 60 GE06LR1/4 315 315 315 200 06 G3/8A 4 22 30.5 11.5 12 26.0 22 14 45 GE06LR3/8 315 315 315 200 06 G1/2A 4 26 33.0 12.0 14 27.0 27 14 60 GE06LR1/2 315 315 315...

[2012.15511] Towards Understanding Asynchronous Advantage Actor-critic... Asynchronous and parallel implementation of standard reinforcement learning (RL) algorithms is a key enabler of the tremendous success of modern RL. Among many asynchronous RL algorithms, arguably the most popular and effective one is the asynchronous advantage actor-critic (A3C) algorithm. Although A3C is becoming the workhorse of RL, its theoretical properties are still not well-understood...

A2C Explained | Papers With Code. A2C, or Advantage Actor Critic, is a synchronous version of the A3C policy gradient method. As an alternative to the asynchronous implementation of A3C, A2C is a synchronous, deterministic implementation that waits for each actor to finish its segment of experience before updating, averaging over all of the actors. This more effectively uses GPUs due to larger batch sizes.

PDF GE-R-ED Male stud connector - Parker Hannifin Corporation

Steel, zinc yellow plated: A3C, GE18LREDOMDA3C, NBR. Stainless steel: 71, GE18LREDOMD71, VIT. Brass: MS, GE18LREDOMDMS, NBR. DIN fittings, Catalogue 4100-5/UK: GE-R-ED male stud connector, male BSPP thread, ED-seal (ISO 1179) / EO 24° cone end.

A3C: ArcelorMittal Columns Calculator - CESDb. The A3C software allows the designer to perform the detailed verification of a single steel member or a composite steel-concrete column according to the rules of the Eurocodes. Download structural analysis software A3C: ArcelorMittal Columns Calculator 2.99 developed by CTICM.

Reinforcement Learning (13): AC, A2C and A3C Algorithms - Zhihu Column. The other kind is the asynchronous approach, meaning the data are not all generated at the same time; A3C is an asynchronous reinforcement learning algorithm of this kind that performs exceptionally well. The A3C model is shown in the figure below: each Worker takes parameters directly from the Global Network and interacts with the environment on its own to output actions. Each Worker's gradients are used to update the Global Network's parameters.
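By contrast with the per-Worker updates described above, the A2C Papers With Code entry describes a synchronous step that waits for every actor to finish its segment and averages over all of them. A minimal sketch of just that averaging step, assuming each actor reports its gradient as a list of floats; `average_gradients` and `synchronous_update` are hypothetical names.

```python
from typing import List, Sequence


def average_gradients(actor_grads: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of the gradients reported by all actors."""
    n = len(actor_grads)
    return [sum(column) / n for column in zip(*actor_grads)]


def synchronous_update(params: Sequence[float],
                       actor_grads: Sequence[Sequence[float]],
                       lr: float) -> List[float]:
    """One A2C-style step, called only after every actor has finished its segment."""
    mean_grad = average_gradients(actor_grads)
    return [p - lr * g for p, g in zip(params, mean_grad)]
```

Because every actor's segment is folded into one update, the whole batch can be processed at once, which is the GPU batch-size argument in the snippet.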

+Sealing +Sealing A3C A3C D1 Sealing PN (bar) Sealing PN (bar) Sealing PN (bar) Sealing PN (bar) Weight Series 5 T1 L1 S1 NBR FKM NBR FKM g/1 piece LL 04 M8×1 11.0 10 - - -...

A3C: We add entropy to the loss to encourage exploration #34 - GitHub. A3C is an on-policy algorithm for the most part (workers getting out of sync makes data a bit off-policy), and it won't deal very well with off-policy data.

Notice how entropy promotes an exploration strategy that is part of the stochastic policy; it's baked into the A3C policy. Entropy is just encouraging the policy to stay stochastic, but it...

Deep Reinforcement Learning with Keras: Implementing A3C - Jianshu. The A3C algorithm is a deep reinforcement learning algorithm based on Actor-Critic, proposed by Google DeepMind. A3C is a lightweight asynchronous learning framework that uses asynchronous gradient descent to optimize neural networks; compared with the AC algorithm, it not only converges better but also trains faster. DQN and DDPG both rely on a very important idea, experience replay, and using experience...

Learn Reinforcement Learning (4) - Actor-Critic, A2C, A3C. A3C stands for Asynchronous Advantage Actor-Critic. Asynchronous means running multiple agents instead of one, updating the shared network periodically and asynchronously. Agents update independently of the execution of other agents when they want to update their shared network. Figure 7: Structure of A3C.

PDF 4091-1/D Ermeto Original - Parker Hannifin Corporation. Designation GE 10 L M A3C (DIN 2353 - C L 10 B - St): fitting type (e.g. straight male stud connector GE); tube OD (e.g. tube outside diameter 10 mm); series (e.g. light L); male thread (e.g. metric male thread M); finish (e.g. A3C, zinc-plated, yellow chromated). Complete fitting according to ISO...

ACC Leadership - AF. Command Staff Leadership. CG - Air National Guard Forces Assistant to COMACC - Maj. Gen. Floyd Dunstan. CR - AF Reserve Mobilization Assistant to COMACC - Maj. Gen. John Breazeale. DS - Director of Staff - Col. David R. Gunter. IA - Political Advisor - Mr. Thomas K. Gainey.

Deep Reinforcement Learning: Playing CartPole through... - TensorFlow. At a high level, the A3C algorithm uses an asynchronous updating scheme that operates on fixed-length time steps of experience. It uses these segments to compute estimators of the rewards and the advantage function. Each worker performs the following workflow cycle: fetch the global network parameters...

A3C-based Computation Offloading and Service Caching in Cloud-Edge...

This paper jointly considers computation offloading, service caching and resource allocation optimization in a three-tier mobile cloud-edge computing structure, in which Mobile Users (MUs) subscribe to a Cloud Service Center (CSC) for computation offloading services and pay related fees monthly or yearly, and the CSC provides computation services to subscribed MUs and charges service fees...
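The GitHub issue on entropy and the TensorFlow workflow quoted above both touch on what a worker computes for each segment: a policy term weighted by the advantage, a value (critic) term, and an entropy bonus that keeps the policy stochastic. A minimal sketch of that scalar loss for a discrete action space follows. Combining the terms into one loss, and the weights `value_coef=0.5` and `entropy_coef=0.01`, are illustrative assumptions rather than values taken from the sources above; the function names are hypothetical.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a discrete action distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def a3c_segment_loss(action_probs: Sequence[Sequence[float]],
                     actions: Sequence[int],
                     returns: Sequence[float],
                     values: Sequence[float],
                     value_coef: float = 0.5,
                     entropy_coef: float = 0.01) -> float:
    """Scalar loss for one rollout segment (loss value only, no gradients).

    In an autodiff framework the advantage would be detached from the graph
    for the policy term; here everything is a plain float.
    """
    policy_loss = 0.0
    value_loss = 0.0
    total_entropy = 0.0
    for probs, a, ret, v in zip(action_probs, actions, returns, values):
        advantage = ret - v
        policy_loss += -math.log(probs[a]) * advantage  # policy-gradient term
        value_loss += (ret - v) ** 2                     # critic regression term
        total_entropy += entropy(probs)                  # exploration bonus
    # Subtracting entropy means minimizing the loss favors more stochastic policies.
    return policy_loss + value_coef * value_loss - entropy_coef * total_entropy
```

When the advantage is zero, only the entropy term remains, so a uniform policy yields a slightly lower loss than a near-deterministic one; this is the "stay stochastic" pressure the GitHub discussion describes.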